It is, I suspect, an accident of vertebrate biology that conscious subjects typically come in neat, determinate bundles -- one per vertebrate body, with no overlap. Things might be very different with less neurophysiologically unified octopuses, garden snails, split-brain patients, craniopagus twins, hypothetical conscious computer systems, and maybe some people with "multiple personality" or dissociative identity.

Consider whether the following two principles are true:

Transitivity of Unity: If experience A and experience B are each part of the conscious experience of a single subject at a single time, and if experience B and experience C are each part of the conscious experience of a single subject at a single time, then experience A and experience C are each part of the conscious experience of a single subject at a single time.

Discrete Countability: Except in marginal cases at spatial and temporal boundaries (e.g., someone crossing a threshold into a room), in any spatiotemporal region the number of conscious subjects is always a whole number (0, 1, 2, 3, 4...) -- never a fraction, a negative number, an imaginary number, an indeterminate number, etc.

Leading scientific theories of consciousness, such as Global Workspace Theory and Integrated Information Theory, are architecturally committed to neat bundles satisfying transitivity of unity and discrete countability. Global Workspace Theories treat processes as conscious if they are available to, or represented in, "the" global workspace (one per conscious animal). Integrated Information Theory contains an "exclusion postulate" according to which conscious systems cannot nest or overlap, and it has no way to model partial subjects or indiscrete systems. Most philosophical accounts of the "unity of consciousness" (e.g., Bayne 2010) also invite commitment to these two theses.

In contrast, Dennett's "fame in the brain" model of consciousness -- though a close kin to global workspace views -- is compatible with denying transitivity of unity and discrete countability. In Dennett's model, a cognitive process or content is conscious if it is sufficiently "famous" or influential among other cognitive processes. For example, if you're paying close attention to a sharp pain in your toe, the pain process will influence your verbal reports ("that hurts!"), your practical reasoning ("I'd better not kick the wall again"), your planned movements (you'll hobble to protect it), and so on. Conversely, if a slight movement in peripheral vision causes a bit of a response in your visual areas, but you don't and wouldn't report it, act on it, think about it, or do anything differently as a result, it is nonconscious. Fame comes in degrees. Something can be famous to different extents among different groups. And there needn't even be a determinately best way of clustering and counting groups.

[DALL-E's interpretation of a "brain with many processes, some of which are famous"]

Here's a simple model of degrees of fame:

Imagine a million people. Each person has a unique identifier (a number 1-1,000,000), a current state (say, a "temperature" from -10 to +10), and the capacity to represent the states of ten other people (ten ordered pairs, each containing the identifier and temperature of one other person).

If there is one person whose state is represented in every other person, then that person is maximally famous (a fame score of 999,999). If there is one person whose state is represented in no other person, then that person has zero fame. Between these extremes is, of course, a smooth gradation of cases.
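The toy model can be sketched in Python at reduced scale (1,000 people instead of a million, so it runs instantly). This is a minimal sketch under stated assumptions, not part of the original model: the names `Person`, `random_population`, `spotlight`, and `fame_scores` are hypothetical, the ten slots are filled at random, and a person's fame is taken to be simply the number of other people whose slots currently hold that person's identifier.

```python
import random
from dataclasses import dataclass, field

SLOTS = 10  # each person can represent the states of ten other people


@dataclass
class Person:
    ident: int        # unique identifier
    temperature: int  # current state, from -10 to +10
    # Ordered pairs (identifier, temperature) of the people this person represents.
    represents: list[tuple[int, int]] = field(default_factory=list)


def random_population(n: int, seed: int = 0) -> list[Person]:
    """Hypothetical setup: each person aims their ten slots at random others."""
    rng = random.Random(seed)
    people = [Person(i, rng.randint(-10, 10)) for i in range(1, n + 1)]
    for p in people:
        others = [q for q in people if q.ident != p.ident]
        for q in rng.sample(others, SLOTS):
            p.represents.append((q.ident, q.temperature))
    return people


def spotlight(people: list[Person], star: int) -> None:
    """Make `star` maximally famous: every other person devotes a slot to them."""
    temp = next(p.temperature for p in people if p.ident == star)
    for p in people:
        if p.ident != star:
            p.represents[0] = (star, temp)


def fame_scores(people: list[Person]) -> dict[int, int]:
    """Fame = number of *other* people currently representing a given person."""
    scores = {p.ident: 0 for p in people}
    for p in people:
        # A set, so two slots pointing at the same person count only once.
        for ident in {i for i, _ in p.represents if i != p.ident}:
            scores[ident] += 1
    return scores


people = random_population(1_000)
spotlight(people, star=1)
scores = fame_scores(people)
print(scores[1])  # 999: represented by every other person
```

With every other person spending one slot on person 1, person 1 reaches the maximum score for this population (999, the scaled-down analog of 999,999); everyone else falls somewhere in the gradation between zero and that maximum.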

If we analogize to cognitive processes, we might imagine the pain in the toe or the flicker in the periphery being just a little famous: Maybe the pain affects motor planning but not speech, or causes a facial expression without influencing the stream of thought you're having about lunch. Maybe the flicker guides a glance and causes a spike of anxiety but has no further downstream effects. Maybe they're briefly reportable but not actually reported, and they have no impact on medium- or long-term memory, or they affect some sorts of memory but not others.

The "ignition" claim of global workspace theory is the empirically defensible (but not decisively established) assertion that there are few such cases of partial fame: Either a cognitive process has very limited effects outside of its functional region or it "ignites" across the whole brain, becoming widely accessible to the full range of influenceable processes. The fame-in-the-brain model enables a different way of thinking that might apply to a wider range of cognitive architectures.

#

We might also extend the fame model to issues of unity and the individuation of conscious subjects.

Start with a simple case: the same setup as before, but with two million people (now thinking of each person as a cognitive process) and the following constraint: Processes numbered 1 to 1,000,000 can only represent the states of other processes in that same group of 1 to 1,000,000; and processes numbered 1,000,001 to 2,000,000 can only represent the states of other processes in their own group. The fame groups are disjoint, as if on different planets. Adapted to the case of experiences: Only you can feel your pain and see your peripheral flicker (if anyone does), and only I can feel my pain and see my peripheral flicker (if anyone does).

This disjointedness is what makes the two conscious subjects distinct from each other. But of course, we can imagine less disjointedness. If we eliminate disjointedness entirely, so that processes numbered 1 to 2,000,000 can each represent the states of any process from 1 to 2,000,000, then our two subjects become one. The planets are entirely networked together. But partial disjointedness is also possible: Maybe processes can represent the states of anyone within 1,000,000 of their own number (call this the Within a Million case). Or maybe processes numbered 950,001 to 1,050,000 can be represented by any process from 1 to 2,000,000, while every process below 950,001 can only be represented by processes 1 to 1,050,000, and every process above 1,050,000 can only be represented by processes 950,001 to 2,000,000 (call this the Overlap case).

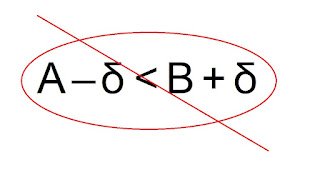
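The four wiring rules just described can be written as simple predicates over process numbers. A hedged sketch, assuming that "can represent" is the only relation that matters: the function names are hypothetical, and `disjoint` and `merged` are invented labels for the first two cases, which the post leaves unnamed.

```python
HALF = 1_000_000  # boundary between the two "planets"

# Each rule answers: may process i represent the state of process j?


def disjoint(i: int, j: int) -> bool:
    # Processes see only others on their own "planet".
    return i != j and (i <= HALF) == (j <= HALF)


def merged(i: int, j: int) -> bool:
    # Disjointedness eliminated: anyone may represent anyone else.
    return i != j


def within_a_million(i: int, j: int) -> bool:
    # Anyone within 1,000,000 of one's own number.
    return i != j and abs(i - j) <= 1_000_000


def overlap(i: int, j: int) -> bool:
    # The Overlap rule is stated in terms of who may represent j.
    if i == j:
        return False
    if 950_001 <= j <= 1_050_000:
        return True            # shared band: representable by everyone
    if j < 950_001:
        return i <= 1_050_000  # representable only by 1 to 1,050,000
    return i >= 950_001        # representable only by 950,001 to 2,000,000


print(disjoint(1, 1_000_001))          # False: different planets
print(within_a_million(1, 1_000_001))  # True
print(overlap(2_000_000, 1_000_020))   # True: shared band
print(overlap(2_000_000, 312_421))     # False
```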

The Overlap case might be thought of as two discrete subjects with an overlapping part. Subject A (1 to 1,050,000) and Subject B (950,001 to 2,000,000) each have their private experiences, but there are also some shared experiences (whenever processes 950,001 to 1,050,000 become sufficiently famous in the range constituting each subject). Transitivity of Unity thus fails: Subject A experiences, say, the taste of a cookie (process 312,421 becoming famous across processes 1 to 1,050,000) and simultaneously the sound of a bell (process 1,000,020 becoming famous across processes 1 to 1,050,000), while Subject B experiences that same sound of a bell alongside the sight of an airplane (both of those processes being famous across processes 950,001 to 2,000,000). Cookie and bell are unified in A. Bell and airplane are unified in B. But no subject experiences the cookie and airplane simultaneously.
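The failure of transitivity can be checked mechanically. A minimal sketch, assuming (as the Overlap rule implies) that the processes able to become famous across a subject are exactly those in its range; the airplane's process number is hypothetical, since the post doesn't give one, and any number above 1,050,000 would serve.

```python
# Overlap-case subjects as inclusive ranges of process numbers. Under the
# Overlap rule, exactly these processes can become famous across each subject.
SUBJECT_A = range(1, 1_050_001)        # processes 1 to 1,050,000
SUBJECT_B = range(950_001, 2_000_001)  # processes 950,001 to 2,000,000
SUBJECTS = [SUBJECT_A, SUBJECT_B]

COOKIE = 312_421
BELL = 1_000_020
AIRPLANE = 1_500_000  # hypothetical: the post gives no number for the airplane


def unified(x: int, y: int) -> bool:
    """Two experiences are unified if some single subject has both at once."""
    return any(x in s and y in s for s in SUBJECTS)


print(unified(COOKIE, BELL))      # True  (both in A)
print(unified(BELL, AIRPLANE))    # True  (both in B)
print(unified(COOKIE, AIRPLANE))  # False: transitivity of unity fails
```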

In the Overlap case, discrete countability is arguably preserved, since it's plausible to say there are exactly two subjects of experience. But it's more difficult to retain Discrete Countability in the Within a Million case. There, if we want to count each distinct fame group as a separate subject, we will end up with about two million different subjects: Subject 1 (1 to 1,000,001), Subject 2 (1 to 1,000,002), Subject 3 (1 to 1,000,003) ... Subject 1,000,001 (1 to 2,000,000), Subject 1,000,002 (2 to 2,000,000), ... Subject 1,999,999 (999,999 to 2,000,000), Subject 2,000,000 (1,000,000 to 2,000,000). (There needn't be a middle subject with access to every process: Simply extend the case up to 3,000,000 processes.) While we could say there would be two million discrete subjects in such an architecture, I see at least three infelicities:

First, person 1,000,002 might never be famous -- maybe even could never be famous, being just a low-level consumer whose destiny is only to make others famous. If so, Subject 1 and Subject 2 would always have, perhaps even necessarily would always have, exactly the same experiences in almost exactly the same physical substrate, despite being, supposedly, discrete subjects. That is, at least, a bit of an odd result.

Second, it becomes too easy to multiply subjects. You might have thought, based on the other cases, that a million processes is what it takes to generate a human subject, and that with two million processes you get either two human subjects or one large subject. But now it seems that, simply by linking those two million processes together by a different principle (with about 1.5 times as many total connections), you can generate not just two but a full two million human subjects. It turns out to be surprisingly cheap to create a plethora of discretely different subjective centers of experience.

Third, the model I've presented is simplified in a certain way: It assumes that there are two million discrete, countable processes that could potentially be famous or create fame in others by representing them. But cognitive processes might not in fact be discrete and countable in this way. They might be more like swirls and eddies in a turbulent stream, and every attempt to give them sharp boundaries and distinct labels might to some extent be only a simplified model of a messy continuum. If so, then our count of two million discrete subjects would itself be a simplified model of a messy continuum of overlapping subjectivities.

The Within a Million case, then, might best be conceptualized not as a case of one subject of experience, nor two, nor two million, but rather as a case that defies any such simple numerical description, contra Discrete Countability.
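The bookkeeping behind the Within a Million case can also be sketched. This is a hedged illustration, not the author's own calculation: `fame_group` (a hypothetical name) computes the range of processes able to represent a given process, reproducing the endpoints listed above, and "connections" is assumed to mean ordered pairs in which one process is permitted to represent another, which yields roughly the 1.5 ratio mentioned in the second infelicity.

```python
def fame_group(i: int, n: int, radius: int) -> tuple[int, int]:
    """Inclusive range of processes that may represent process i (i included)
    when each process may represent anyone within `radius` of its own number."""
    return max(1, i - radius), min(n, i + radius)


def distinct_groups(n: int, radius: int) -> set[tuple[int, int]]:
    """Candidate subjects: one fame group per process, duplicates merged."""
    return {fame_group(i, n, radius) for i in range(1, n + 1)}


def disjoint_links(n: int) -> int:
    """Ordered (representer, represented) pairs in the two-planet case."""
    half = n // 2
    return 2 * half * (half - 1)


def within_radius_links(n: int, radius: int) -> int:
    """Ordered pairs (i, j), i != j, with |i - j| <= radius among 1..n.
    Each distance d has n - d unordered pairs, each counted twice."""
    r = min(radius, n - 1)
    return 2 * (r * n - r * (r + 1) // 2)


N, R = 2_000_000, 1_000_000
print(fame_group(1, N, R))          # (1, 1000001)
print(fame_group(1_999_999, N, R))  # (999999, 2000000)
print(within_radius_links(N, R) / disjoint_links(N))  # about 1.5

# Scaled down: 20 processes, radius 10. Processes 10 and 11 share the
# all-encompassing group (1, 20), so there are 19 distinct groups; with
# 30 processes every group is distinct and none spans all of them.
print(len(distinct_groups(20, 10)), len(distinct_groups(30, 10)))  # 19 30
```

The scaled-down counts echo the parenthetical about a middle subject: when the population is exactly twice the radius, one group spans everything, and extending the population removes it.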

#

This is abstract and far-fetched, of course. But once we have stretched our minds in this way, it becomes, I think, easier to conceive of the possibility that some real cases (cognitively partly disunified mollusks, for example, or people with unusual conditions or brain structures, or future conscious computer systems) might defy transitivity of unity and discrete countability.

What would it be like to be such an entity / pair of entities / diffuse-bordered-uncountable-groupish thing? Unsurprisingly, we might find such forms of consciousness difficult to imagine with our ordinary vertebrate concepts and philosophical tools derived from our particular psychology.